Seven essential elements of production domain analytics success

We have entered the ‘edge’ era in the energy sector, striving for new insights and intelligent actions through automation and machine learning (ML). A consensus is emerging that ‘domain analytics is where most success is found’: when domain knowledge is combined with data science, the two reach a level that neither discipline could reach on its own.

If we simplify data analytics to “code + data + labels”, we can see that the industry has made significant strides in adding domain knowledge to the code, while neglecting to do so in data engineering (data + labels). Marrying domain expertise with data engineering magnifies project value and is crucial for scalability.

In this post, I will present seven essential elements that can deliver scalable domain analytics success for production.

1) Ask yourself: is the answer to your problem contained in your data?

Assuming you have a clear problem definition and framing, you must assess this question before starting any data science. Electric submersible pump (ESP) failure prediction projects are a very revealing example. I have seen a dozen of these fail because teams jumped into ML/AI when the data contained, at best, a small fraction of the answer. First, ask yourself: how do ESPs fail? If the main root causes of failure are mechanical and electrical, is the data collected today on your ESP assets enough? How likely are you to capture a failure-inducing hotspot caused by poor lubrication inside the pump when downhole pressures and temperatures are measured at the bottom of the motor? Is the electrical sampling rate sufficient to assess vibrations and other potential instabilities in the frequency domain? What fraction of failure signatures could your ESP data potentially capture?

Always ask yourself how much of the answer to your problem is likely to be contained in your data, and whether that matches your project goals.
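One way to make this question concrete before any modeling is a coverage check: list the failure modes you care about, the measurement that would reveal each one, and the frequency content of its signature, then compare that list against what you actually record. The Python sketch below assumes this simple model. The sensor names, sampling rates, and signature frequencies are hypothetical placeholders, not ESP engineering values. The sampling test uses the Nyquist criterion: the sampling rate must exceed twice the highest frequency of interest.

```python
from dataclasses import dataclass

# Hypothetical data model for a coverage check; all names and numbers
# are illustrative placeholders, not real ESP specifications.


@dataclass(frozen=True)
class Sensor:
    name: str
    sample_rate_hz: float  # samples per second


@dataclass(frozen=True)
class FailureMode:
    name: str
    required_measurement: str  # quantity whose signal would reveal the failure
    signal_freq_hz: float      # highest frequency in that failure signature


def is_observable(mode, sensors):
    """True if a sensor measures the required quantity fast enough.

    Nyquist criterion: the sampling rate must exceed twice the highest
    frequency of interest, otherwise the signature aliases or is missed.
    """
    sensor = sensors.get(mode.required_measurement)
    return sensor is not None and sensor.sample_rate_hz > 2 * mode.signal_freq_hz


def coverage(modes, sensors):
    """Fraction of failure modes whose signature the data could capture."""
    observed = [m for m in modes if is_observable(m, sensors)]
    return len(observed) / len(modes), observed


# Illustrative inventory: what is measured today, and how often.
sensors = {
    "intake_pressure": Sensor("intake_pressure", 1 / 60),  # one reading per minute
    "motor_current": Sensor("motor_current", 1.0),         # one reading per second
}
modes = [
    FailureMode("gas interference", "intake_pressure", 1 / 3600),
    FailureMode("electrical instability", "motor_current", 60.0),
    FailureMode("lubrication hotspot", "pump_internal_temperature", 1 / 600),
]

fraction, observed = coverage(modes, sensors)
print(f"{fraction:.0%} of failure modes observable:", [m.name for m in observed])
```

With these placeholder numbers only one of the three failure modes is observable: minute-resolution intake pressure can follow a slow signature, but 1 Hz current data cannot resolve a 60 Hz instability, and no sensor measures temperature inside the pump.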

2) Develop customizable data quality tools

Time series data is central to most production workflows. Typical data quality problems are outliers, frozen values, and missing data, but this is only the tip of the iceberg: a lot can happen along the data flow chain. Telemetry from some older analog devices requires specific knowledge to interpret. Values arriving out of order from a supervisory control and data acquisition (SCADA) system may throw off algorithms that were built on historical data or that require strict time ordering. Digitizing production domain knowledge is necessary to distinguish some sensor failures from genuine changes in well performance. Over time, even the cleanest data is subject to drift. Reservoir depletion is a good example: without recalibration by an expert, an ML model trained a couple of months earlier may misinterpret the physics behind a slowly declining downhole pressure measurement.

Most projects already address some of these issues; however, their solutions are often too specific and not repeatable. Once they become commercially available, customizable data quality tools that provide generic ingestion, cleansing, signal processing, and simple coding of various domain rules will be a step change in the performance and scalability of production workflows.
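As a minimal sketch of what a customizable data quality layer might look like, the Python below runs generic checks (out-of-order timestamps, missing data, frozen values, robust outliers) and lets users plug in their own domain rules as named predicates. Everything here is an illustrative assumption rather than a reference to any commercial tool: the function names, the (timestamp, value) sample format, the median/MAD outlier test with a 3.5 threshold, and the example pressure feed. Each finding is returned as a (kind, start, end) event.

```python
from statistics import median

# Each sample is (timestamp_seconds, value). Every check returns quality
# events as (kind, start, end) tuples so they can be stored like other labels.


def out_of_order(samples):
    """Samples whose timestamp does not increase, in arrival order."""
    return [("out_of_order", samples[i][0], samples[i][0])
            for i in range(1, len(samples))
            if samples[i][0] <= samples[i - 1][0]]


def missing(samples, max_gap_s):
    """Intervals longer than max_gap_s with no data (checked on sorted data)."""
    ordered = sorted(samples)
    return [("missing", t0, t1)
            for (t0, _), (t1, _) in zip(ordered, ordered[1:])
            if t1 - t0 > max_gap_s]


def frozen(samples, min_run):
    """Runs of at least min_run identical consecutive values."""
    ordered = sorted(samples)
    events, start = [], 0
    for i in range(1, len(ordered) + 1):
        # A run ends when the value changes or the series ends.
        if i == len(ordered) or ordered[i][1] != ordered[start][1]:
            if i - start >= min_run:
                events.append(("frozen", ordered[start][0], ordered[i - 1][0]))
            start = i
    return events


def outliers(samples, threshold=3.5):
    """Robust z-score outliers based on the median and the MAD."""
    if not samples:
        return []
    values = [v for _, v in samples]
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []
    scale = 1.4826 * mad  # makes the MAD comparable to a standard deviation
    return [("outlier", t, t) for t, v in samples if abs(v - med) / scale > threshold]


def run_checks(samples, max_gap_s, min_run, rules):
    """Generic checks plus user-supplied domain rules {name: predicate(value)}."""
    events = (out_of_order(samples) + missing(samples, max_gap_s)
              + frozen(samples, min_run) + outliers(samples))
    for name, violates in rules.items():
        events += [(f"rule:{name}", t, t) for t, v in samples if violates(v)]
    return sorted(events, key=lambda e: (e[1], e[0]))


# Hypothetical downhole pressure feed (bar), nominally one sample per minute.
feed = [(0, 150.2), (60, 150.0), (120, 150.0), (180, 150.0), (240, 150.0),
        (300, 149.9), (660, 149.7), (600, 149.8), (720, 480.0), (780, -5.0)]
rules = {"negative_pressure": lambda v: v < 0}

for event in run_checks(feed, max_gap_s=120, min_run=4, rules=rules):
    print(event)
```

Thresholds such as `min_run` need domain input: a genuinely stable well can look frozen at minute resolution, which is exactly where digitized expert knowledge separates sensor failures from real well behavior.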

3) Generalize the concept of label in your organization

In data science, labelling is the process of annotating and identifying raw data to give it context. The most common label in production is an event: a time series label with a start time, an end time, and contextual information. Examples of events include a scheduled inspection and maintenance of a separator, with dates and related costs, or several hours of abnormal slugging during surface pipeline troubleshooting. The reality is that the industry already captures many events in various forms: email exchanges, spreadsheets, databases, screenshots, and so on. The challenge is that these are largely not recorded as events, nor with potential future ML use in mind. The data quality issues discussed above are events themselves. Event-based data capture has allowed artificial lift surveillance to evolve from knowing the type and approximate frequency of ESP issues to tracking precise cumulative downtime per event type and automating event detection.

As organizations get better at generalizing event capture in their systems, they improve day-to-day operations reporting and unlock the potential of their data through integration with business intelligence and ML.
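An event label can be as simple as a record with a start, an end, and free-form context. The sketch below is a hypothetical Python data model (the `Event` class, its fields, and the sample events are assumptions for illustration) showing how capturing events in one consistent structure turns a metric like cumulative downtime per event type into a simple aggregation instead of a spreadsheet exercise.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Event:
    """A time series label: start, end, and free-form context."""
    asset: str
    event_type: str
    start: datetime
    end: datetime
    context: dict = field(default_factory=dict)  # e.g. cost, reporter, notes

    def __post_init__(self):
        if self.end < self.start:
            raise ValueError("event ends before it starts")

    @property
    def duration(self) -> timedelta:
        return self.end - self.start


def downtime_by_type(events):
    """Cumulative duration per event type."""
    totals = defaultdict(timedelta)  # timedelta() is a zero duration
    for e in events:
        totals[e.event_type] += e.duration
    return dict(totals)


# Hypothetical events that might otherwise live in emails and spreadsheets.
events = [
    Event("ESP-01", "gas lock", datetime(2023, 3, 1, 2, 0), datetime(2023, 3, 1, 5, 0)),
    Event("ESP-01", "gas lock", datetime(2023, 3, 4, 10, 0), datetime(2023, 3, 4, 11, 30)),
    Event("ESP-02", "underload trip", datetime(2023, 3, 2, 8, 0),
          datetime(2023, 3, 2, 10, 0), {"reporter": "surveillance engineer"}),
    Event("SEP-A", "scheduled maintenance", datetime(2023, 3, 6, 7, 0),
          datetime(2023, 3, 6, 15, 0), {"cost_usd": 12000}),
]

for event_type, total in downtime_by_type(events).items():
    print(f"{event_type}: {total}")
```

Data quality findings such as frozen or missing data fit the same structure, so they can be reported and aggregated alongside operational events.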

4) Label your data in context

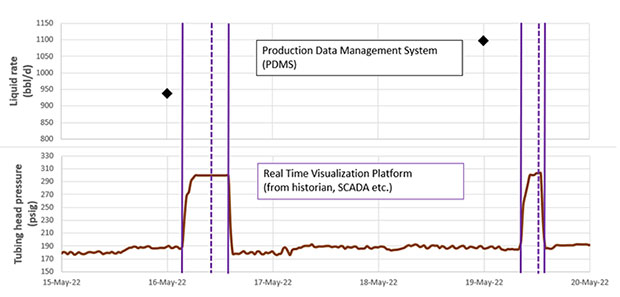

I believe another main reason behind the relative lack of ML success in production workflows is that data and labels are out of context. While time series data is displayed in ever-improving real-time visualization platforms, labels and events are captured and viewed in separate systems such as databases or spreadsheets. The best example is operators’ production well test data. Figure 1 represents how the average liquid rate of a well test is typically recorded with a midnight timestamp in a production data management system (PDMS). The PDMS is separate from the real-time visualization system, where high-resolution tubing head pressure can reveal the precise start, stable, and end times of the well test event. While this practice is perfectly fine for allocation and other production analyses, it can significantly limit ML-enabled automated workflows such as well test validation, virtual flow metering, or digital twin calibration and prediction.

This concept generalizes to all production actors. The more they collaborate on capturing events in the context of relevant data from other units, the more refined their daily reporting will be, while naturally enabling more advanced workflows.
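To illustrate labelling in context, the sketch below takes a midnight-stamped well test record and estimates the test's start, stable, and end times from high-resolution tubing head pressure (THP). The detection heuristic is an assumption made for this example: the test opens and closes with THP step changes larger than `step`, and the stable period is the longest run between them whose sample-to-sample changes stay within `tol`. The well name, rate, thresholds, and pressure values are all hypothetical.

```python
# Hypothetical heuristic for placing a PDMS well test record in the context
# of high-resolution THP samples: (minutes since midnight, THP in bar).


def well_test_window(samples, step, tol):
    """Estimate (start, stable_start, stable_end, end) of a well test, or None."""
    jumps = [i for i in range(1, len(samples))
             if abs(samples[i][1] - samples[i - 1][1]) > step]
    if len(jumps) < 2:
        return None  # no clear opening and closing step change
    first, last = jumps[0], jumps[-1]
    best_start = best_end = run_start = first
    for i in range(first + 1, last):
        if abs(samples[i][1] - samples[i - 1][1]) > tol:
            run_start = i  # still settling: restart the stable run here
        if i - run_start > best_end - best_start:
            best_start, best_end = run_start, i
    return (samples[first][0], samples[best_start][0],
            samples[best_end][0], samples[last][0])


def contextualize(record, samples, step=2.0, tol=0.2):
    """Attach estimated event times to a midnight-stamped PDMS record."""
    window = well_test_window(samples, step, tol)
    if window is None:
        return {**record, "window_found": False}
    keys = ("start_min", "stable_start_min", "stable_end_min", "end_min")
    return {**record, **dict(zip(keys, window)), "window_found": True}


# Synthetic test day; real THP data would be far denser.
thp = [(0, 20.0), (10, 20.0), (20, 24.0), (30, 24.6), (40, 25.0),
       (50, 25.1), (60, 25.0), (70, 25.05), (80, 20.1), (90, 20.0)]
# Hypothetical record; the rate is in whatever units the PDMS stores.
pdms_record = {"well": "W-12", "timestamp_min": 0, "avg_liquid_rate": 850.0}
print(contextualize(pdms_record, thp))
```

On this synthetic day the function reports a start at minute 20, a stable period from minute 40 to minute 70, and an end at minute 80, information the midnight-stamped record alone cannot provide.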

5) Consider gamification to improve your data workflows

Now, why would production engineers dedicate additional time to capturing more refined event information? Even if software tools eventually make the process easier and more elegant with labelling in context, it remains a tedious task. Obviously, this shouldn’t be done for the sole purpose of ML, but rather to improve the overall business intelligence of a production organization, starting with enhanced data mining. It is common to see experts jump at opportunities to answer questions on enterprise social networks, or surveillance engineers take pride in being the best event reporter of the month. This sense of reward can be leveraged in each aspect of “code + data + labels”. Developer groups can take inspiration from sites like Stack Overflow, where members assist the community with technical guidance and earn reputation points through a democratic reward mechanism. Organizations can reward the best reporters based not on the number of events captured, but on the cumulative equipment downtime or deferred oil production avoided, which becomes possible when teams discuss and diagnose event details with labels in context.

Gamification is an interesting avenue for streamlining domain analytics while bringing immediate value to regular operations.
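The difference between rewarding event counts and rewarding impact is easy to see with a toy leaderboard. In this hypothetical Python sketch, each report carries the downtime hours it helped avoid. The names and numbers are invented, and in practice attributing avoided downtime to a report is itself a judgment that teams would need to agree on.

```python
from collections import Counter, defaultdict

# Hypothetical report log: (reporter, downtime hours avoided thanks to the
# diagnosed event). The attribution of avoided hours is an assumption.
reports = [("alice", 2.0), ("alice", 0.5),
           ("bob", 0.2), ("bob", 0.1), ("bob", 0.3),
           ("carol", 6.0)]


def leaderboard_by_count(reports):
    """Ranking by number of events captured."""
    counts = Counter(reporter for reporter, _ in reports)
    return [name for name, _ in counts.most_common()]


def leaderboard_by_impact(reports):
    """Ranking by cumulative downtime avoided."""
    totals = defaultdict(float)
    for reporter, hours in reports:
        totals[reporter] += hours
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


print(leaderboard_by_count(reports))   # most events reported first
print(leaderboard_by_impact(reports))  # most downtime avoided first
```

Here the most prolific reporter ranks last by impact, while a single well-diagnosed event tops the impact ranking.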

6) Use scalable model management

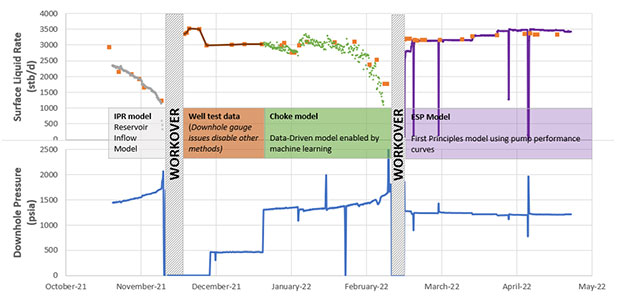

In this section, model refers to a simulator (e.g., multiphase flow, digital twin). We have all seen asset models eventually drift out of calibration or become obsolete unless they are continuously maintained by the project engineers who deployed them. This is problematic, since production teams manage hundreds to thousands of assets that continuously change through workovers and interventions over the life of an oilfield. Virtual flow metering (VFM) is one of many workflows that cannot scale without proper model management. VFM models require precise time range validation (much like event data capture) and integration with data quality modules to benefit downstream workflows such as shortfall analysis or allocation. Figure 2 illustrates how a production engineer may choose a combination of physics-based or ML VFM models depending on data availability and completion changes.

Tools that give end users simple and efficient model (re)loading, time range validation, and recalculation will be the difference-maker in scaling production asset modeling and the automation schemes derived from it.
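Time range validation for VFM models can be sketched as a small registry in which each model version carries a validity interval, so that each day is served by exactly one model and any gap or overlap is flagged for review. The Python below is a minimal illustration under those assumptions. The `ModelVersion` fields, the physics-to-ML switch after a workover, and all dates are hypothetical, loosely following the kind of choice described for Figure 2.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    kind: str                 # "physics" or "ml"
    valid_from: date          # inclusive
    valid_to: Optional[date]  # exclusive; None means still in service


def model_for(versions, day):
    """The model version valid on `day`, or None if no model covers it."""
    for v in versions:
        if v.valid_from <= day and (v.valid_to is None or day < v.valid_to):
            return v
    return None


def validate(versions):
    """Report gaps and overlaps between consecutive validity ranges."""
    issues = []
    ordered = sorted(versions, key=lambda v: v.valid_from)
    for prev, nxt in zip(ordered, ordered[1:]):
        if prev.valid_to is None or prev.valid_to > nxt.valid_from:
            issues.append(("overlap", prev.name, nxt.name))
        elif prev.valid_to < nxt.valid_from:
            issues.append(("gap", prev.valid_to, nxt.valid_from))
    return issues


# Hypothetical history for one well: a physics-based VFM until a workover,
# then an ML VFM once enough post-workover data exists.
w12 = [
    ModelVersion("W-12 physics v3", "physics", date(2022, 1, 1), date(2022, 6, 15)),
    ModelVersion("W-12 ml v1", "ml", date(2022, 7, 1), None),
]

print(validate(w12))                      # the un-modeled period after the workover
print(model_for(w12, date(2022, 6, 20)))  # None: rates for this day need review
```

Flagging the gap, rather than silently extending the previous model past the workover, keeps downstream allocation or shortfall analysis from relying on an obsolete model.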

7) Take inspiration from other industries for user experience

The glue that will hold these software workflows together is user experience (UX). The good news is that the energy industry doesn’t need to reinvent the wheel; there is a plethora of efficient designs already out there in other industries. Starting in-house, production can display time series data in a log fashion with annotations (essentially labels in context), just as drilling and logging services do with depth (see what I did with both figures). Finance uses dynamic grids for performance evaluation everywhere. Look at the stock app on your smartphone: it lets you pick any time range, then select any two timestamps on the graph to see the price change between them. Social media and office collaboration software use #hashtags and @mentions, enabling rapid, contextualized access to information between employees.
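Two of these patterns translate directly into small pieces of logic. The hypothetical Python sketch below computes the change between any two picked timestamps, as a stock app does, and extracts #hashtags and @mentions from a free-text note so that it could be linked to assets and people. The function names, the regular expression, and the sample data are assumptions for illustration, not the behavior of any particular product.

```python
import re
from bisect import bisect_right


def value_at(series, t):
    """Last value at or before t in a time-sorted list of (t, value)."""
    i = bisect_right([ts for ts, _ in series], t) - 1
    if i < 0:
        raise ValueError("t is before the first sample")
    return series[i][1]


def change_between(series, t0, t1):
    """Absolute and percent change between two picked timestamps."""
    v0, v1 = value_at(series, t0), value_at(series, t1)
    return v1 - v0, 100.0 * (v1 - v0) / v0


# '#tag' or '@mention' not preceded by a word character (skips e-mail addresses).
TAG = re.compile(r"(?<!\w)([#@])(\w[\w-]*)")


def extract_tags(text):
    """Split a note into (hashtags, mentions)."""
    tags, mentions = [], []
    for sigil, word in TAG.findall(text):
        (tags if sigil == "#" else mentions).append(word)
    return tags, mentions


oil_rate = [(0, 1000.0), (1, 800.0), (2, 900.0), (3, 1000.0)]  # hypothetical daily rates
print(change_between(oil_rate, 1, 3))
print(extract_tags("Slugging again on #FL-3, @jdoe please check the #ESP-02 trend"))
```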

Imagine when a production software platform embeds all these elements. It will create a more collaborative environment where production data is continuously enriched as operations unfold, facilitating today’s operations and enabling the workflows of tomorrow.

These essential elements will help bridge the gap between field operations and production engineers. It is crucial that the industry leverage the new generation of tools to digitize expert knowledge. This will not only pave the way to automation of oilfield operations, but also free production actors from lower-value tasks so that they can dedicate more of their time to advanced production asset and field optimization.